Data

In God we trust, all others bring data.

—William Edwards Deming

Data is a broad term that refers to facts, statistics, or other information, either in raw (unprocessed) form or organized in some way. Data can take many forms, including numbers, text, images, audio recordings, and more.

Data processing

Preparing raw data for machine learning involves several stages of processing and manipulation that transform it into a structured format suitable for modeling. The most common stages are:

data collection;

data cleaning: handling missing values, removing duplicates, detecting outliers, converting data types;

data exploration and visualization;

feature engineering.
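To make the cleaning steps concrete, here is a minimal NumPy sketch on a tiny hypothetical table of heights and weights. The data, the order of the steps, the \(1.5\cdot\mathrm{IQR}\) outlier rule, and mean imputation are illustrative assumptions, not the only reasonable choices. With pandas, the same tasks are typically done with methods such as DataFrame.drop_duplicates and DataFrame.fillna.

```python
import numpy as np

# Hypothetical raw records (height in cm, weight in kg) as they might come from a CSV file:
# all values are strings, "" marks a missing value, one row is duplicated,
# and 700 kg is an obvious data-entry error.
raw = [
    ("170", "65"),
    ("182", ""),
    ("165", "58"),
    ("165", "58"),
    ("175", "72"),
    ("178", "700"),
]

# Removing duplicates: keep the first occurrence of each row, preserving order
rows = list(dict.fromkeys(raw))

# Data type conversion: strings -> floats, missing entries -> NaN
X = np.array([[float(v) if v else np.nan for v in row] for row in rows])

# Outlier detection with the 1.5 * IQR rule, per column (NaNs are ignored)
q1, q3 = np.nanpercentile(X, [25, 75], axis=0)
iqr = q3 - q1
is_outlier = (X < q1 - 1.5 * iqr) | (X > q3 + 1.5 * iqr)  # NaN compares as False
X = X[~is_outlier.any(axis=1)]

# Handling missing values: replace each NaN by the mean of its column
X = np.where(np.isnan(X), np.nanmean(X, axis=0), X)
print(X)
```

The order of the steps matters here: outliers are dropped before imputation, otherwise the 700 kg entry would inflate the column mean used to fill the missing weight.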

The result of these manipulations is what is usually called a dataset: a specific collection of data that is organized and structured in a way that makes it suitable for analysis, processing, or machine learning tasks.

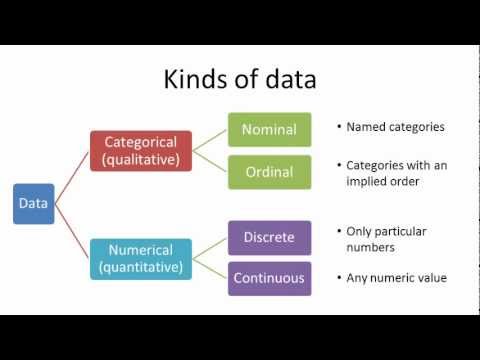

Data types

Numerical continuous data

Continuous data can take on any real[1] value within a range and often involves measurements. For instance:

height

temperature

distance

time

Numerical discrete data

Discrete data consists of distinct, separate values and often involves counts or categorizations, e.g.

number of children

shoe size

test scores

Important

The distinction between continuous and discrete data is occasionally ambiguous. For example, age in whole years is naturally a discrete variable. However, if we allow fractional ages, e.g. \(30.2\) years, it becomes a continuous variable.
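A small illustration of the same age in both forms, using only the standard library. The dates are made up, and measuring fractional years as days divided by \(365.25\) is one convention among several.

```python
from datetime import date

birth = date(1993, 3, 1)   # hypothetical birth date
today = date(2023, 5, 15)  # hypothetical reference date

# Discrete: completed years (subtract 1 if this year's birthday has not happened yet)
age_years = today.year - birth.year - ((today.month, today.day) < (birth.month, birth.day))

# Continuous: elapsed time measured in average (365.25-day) years
age_fractional = (today - birth).days / 365.25

print(age_years, round(age_fractional, 1))  # 30 30.2
```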

Categorical nominal variables

Nominal data consists of categories with no inherent order or ranking. For example:

colors

fruits

gender

countries

Categorical ordinal variables

Ordinal data includes categories with a meaningful order or ranking. Examples:

education level

customer satisfaction

movie rating

top-10 items suggested by a search engine
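Because ordinal categories are ranked, one simple way to turn them into numbers is to map each category to its position in the ranking (an ordinal encoding). The sketch below assumes a hypothetical list of education levels; scikit-learn provides the same idea as sklearn.preprocessing.OrdinalEncoder.

```python
# Hypothetical ordinal feature: the list order encodes the ranking (lowest first)
education_levels = ["primary", "secondary", "bachelor", "master", "phd"]
rank = {level: k for k, level in enumerate(education_levels)}

answers = ["master", "primary", "bachelor", "master"]
encoded = [rank[a] for a in answers]
print(encoded)  # [3, 0, 2, 3]

# The integer codes preserve the order, so comparisons stay meaningful
print(rank["phd"] > rank["bachelor"])  # True
```

Applying such a mapping to nominal data (colors, countries) would invent an order that does not exist, which is why nominal features are usually one-hot encoded instead.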

Examples of datasets

There are several ways to load well-known datasets in Python.

Tip

To install the Python library scikit-learn (aka sklearn), run the command pip install scikit-learn. The examples below also require pandas, which can be installed with pip install pandas.

For instance, we can use helper functions from the sklearn.datasets module.

Iris dataset

from sklearn.datasets import load_iris

iris_data = load_iris(as_frame=True)

iris_data['data']


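This is a tabular dataset with \(150\) samples and \(4\) numerical features (sepal length, sepal width, petal length, and petal width, in cm). The targets are encoded by the integers \(0\), \(1\), \(2\):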

iris_data['target'].value_counts()


What do these values mean?

iris_data.target_names


Here is what they look like in the wild (figure 1.1 from [Murphy, 2022]):

[Figure: the three iris species: setosa, versicolor, virginica]
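If pandas is not installed, the iris data can still be explored: called without as_frame=True, load_iris returns plain NumPy arrays. A minimal sketch (the variable names are arbitrary):

```python
import numpy as np
from sklearn.datasets import load_iris

# Without as_frame=True, load_iris returns NumPy arrays, so pandas is not needed
iris = load_iris()
X, y = iris.data, iris.target

print(X.shape)                   # (150, 4)
print(iris.feature_names)        # names of the 4 columns
print(np.bincount(y))            # [50 50 50]: number of samples in classes 0, 1, 2
print(iris.target_names[y[:3]])  # species names of the first three samples
```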

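MNIST dataset

A classical dataset of handwritten digits: \(70\,000\) grayscale images of size \(28\times 28\), each flattened into a vector of \(784\) pixel intensities.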

from sklearn.datasets import fetch_openml

X, Y = fetch_openml('mnist_784', return_X_y=True, parser='auto')

X.shape, Y.shape


import matplotlib.pyplot as plt
import numpy as np

# scale pixel intensities from [0, 255] to floats in [0, 1]
X = X.astype(float).values / 255
# convert the labels to integers
Y = Y.astype(int).values
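The definition of plot_digits is not shown on this page. The helper below is a hypothetical reconstruction that only follows the signature plot_digits(X, y_true, y_pred=None, n=4, random_state=123); the \(n\times n\) grid layout, the titles, and the colormap are assumptions. It expects each row of X to be a flattened \(28\times 28\) image.

```python
import matplotlib.pyplot as plt
import numpy as np

def plot_digits(X, y_true, y_pred=None, n=4, random_state=123):
    """Hypothetical helper: plot an n x n grid of randomly chosen digits.

    X: array of shape (num_samples, 784), one flattened 28 x 28 image per row.
    y_true: true labels; y_pred: optional predicted labels shown under them.
    """
    rng = np.random.default_rng(random_state)
    idx = rng.choice(len(X), size=n * n, replace=False)  # distinct random samples
    fig, axes = plt.subplots(n, n, figsize=(2 * n, 2 * n), squeeze=False)
    for ax, i in zip(axes.ravel(), idx):
        ax.imshow(X[i].reshape(28, 28), cmap="gray_r")  # dark strokes on a light background
        title = f"true: {y_true[i]}"
        if y_pred is not None:
            title += f"\npred: {y_pred[i]}"
        ax.set_title(title)
        ax.axis("off")
    fig.tight_layout()
    return fig
```

Under these assumptions, calling plot_digits(X, Y, random_state=12) on the scaled MNIST arrays would draw \(16\) random digits with their labels.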

plot_digits(X, Y, random_state=12)

Q. What type of data is the MNIST dataset?

One-hot encoding

Before feeding categorical data into machine learning models, we need to convert it to numbers. The standard way to do this is one-hot encoding, also called dummy encoding.

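If a feature takes values in the finite set \( \{1, \ldots, K\}\), a value \(x\) is encoded by the binary vector

\[
\boldsymbol \delta = (\delta_1, \ldots, \delta_K), \quad \delta_k = \begin{cases} 1, & x = k,\\ 0, & x \neq k. \end{cases}
\]

Thus each categorical variable taking \(K\) different values is converted into \(K\) binary numeric variables.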

Note

In fact, \(K-1\) dummy variables are enough: exactly one of \(\delta_1, \ldots, \delta_K\) equals \(1\), so the value of \(\delta_K = 1 - \sum_{k=1}^{K-1} \delta_k\) can be deduced from the values of \(\delta_1, \ldots, \delta_{K-1}\).
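A minimal NumPy sketch of one-hot encoding; the function name one_hot and the color categories are hypothetical. Each value is mapped to its index \(k\) in the category list and then to the \(k\)-th row of the \(K\times K\) identity matrix, which is exactly the vector \(\boldsymbol \delta\).

```python
import numpy as np

def one_hot(values, categories):
    """Return a (len(values), K) 0/1 matrix with delta_k = 1 iff value == categories[k]."""
    index = {c: k for k, c in enumerate(categories)}
    idx = np.array([index[v] for v in values])
    return np.eye(len(categories), dtype=int)[idx]  # pick rows of the identity matrix

colors = ["red", "green", "blue"]  # K = 3 categories
D = one_hot(["green", "red", "green", "blue"], colors)
print(D.tolist())  # [[0, 1, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]]

# K - 1 dummy variables: drop the last column, it equals 1 minus the sum of the others
D_reduced = D[:, :-1]
print(np.array_equal(1 - D_reduced.sum(axis=1), D[:, -1]))  # True
```

Library implementations include pandas.get_dummies and sklearn.preprocessing.OneHotEncoder; with drop_first=True (pandas) or drop='first' (scikit-learn) they keep \(K-1\) columns, dropping the first category rather than the last, which is equally valid.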

Feature matrix

A tabular numerical dataset can be represented as a feature matrix (or design matrix) \(\boldsymbol X\) of shape \(N\times D\) where

\(N\) — number of samples (rows)

\(D\) — number of features (columns)

Each sample \(\boldsymbol x_i\) is therefore represented by the \(i\)-th row of the feature matrix \(\boldsymbol X\).

Important

A sample \(\boldsymbol x_i\) occupies a row of \(\boldsymbol X\) and has \(D\) coordinates. However, in linear algebra a vector is a column vector by default. That’s why in vector-matrix operations \(\boldsymbol x_i\) is usually treated as a column vector, and the \(i\)-th row of \(\boldsymbol X\) is written as \(\boldsymbol x_i^\top\) to emphasize that it is a row.
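The row/column convention can be checked in NumPy with a random feature matrix; the sizes \(N=5\), \(D=3\) and the weight vector w are arbitrary assumptions. Note that NumPy stores X[i] as a 1-D array, which is neither a row nor a column; reshape makes the column form explicit.

```python
import numpy as np

rng = np.random.default_rng(0)
N, D = 5, 3
X = rng.normal(size=(N, D))   # feature matrix: N samples (rows) x D features (columns)
w = rng.normal(size=D)        # hypothetical weight vector with D coordinates

x_2 = X[2]                    # the sample in row 2 (0-based indexing), shape (D,)
x_2_col = x_2.reshape(-1, 1)  # the same sample as an explicit D x 1 column vector
print(X.shape, x_2.shape, x_2_col.shape)  # (5, 3) (3,) (3, 1)

# Each entry of X @ w is the inner product of one row with w: (X w)_i = x_i^T w
y = X @ w
print(np.isclose(y[2], x_2 @ w))  # True
```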

TODO

Give other examples of datasets

Investigate the type of data in them (all columns of the iris dataset are numerical continuous, but this isn’t always the case)

Describe the ways of fetching datasets in Python

Add info about image and text datasets (see also [Murphy, 2022], pp. 19–22)

Add more visualizations and quizzes